Google seemingly solved efficient attention

Google chatbot shocks waitress by perfectly retrieving needle from haystack at shockingly low cost

[Epistemic status - speculative, but sort of grounded, might be wrong - don’t take too seriously]

Alternative title: why Gemini 3 Pro gets to be so big

This one is a bit more technical than usual, but I want to try writing technical articles. Thank you to @bycloud on Twitter for giving me the idea to write about this; it’s really his discovery. He makes excellent videos on the state of LLM progress, and I highly suggest checking him out.

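Traditional attention in transformers is O(n^2) in the sequence length n: every token attends to every other token, so doubling the context roughly quadruples the attention cost. That doesn’t scale well.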
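To make the quadratic part concrete, here is a minimal Python sketch that just counts query-key pairs. The function name is mine, and it ignores heads, head dimension, and causal masking, so treat the numbers as orders of magnitude rather than real FLOP counts.

```python
def attention_score_count(n_tokens: int) -> int:
    """Query-key pairs that full (unmasked) self-attention scores for n tokens."""
    return n_tokens * n_tokens


# Every 10x increase in context length costs 100x more pairwise scores.
for n in (1_000, 10_000, 100_000, 1_000_000):
    print(f"{n:>9,} tokens -> {attention_score_count(n):>17,} pairwise scores")
```

At 1 million tokens that is a trillion pairs per attention layer, which is why everyone wants something cheaper.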

So, there’s been a big race towards what we call subquadratic attention or even linear attention. It’s a very active area of research, with Moonshot AI (the lab behind Kimi) spearheading Kimi Linear attention and DeepSeek inventing DeepSeek Sparse Attention (DSA). Both of these (Chinese) labs publish their findings. This is not something OpenAI, Anthropic, or Google does; they would rather keep it a secret. More on that later.

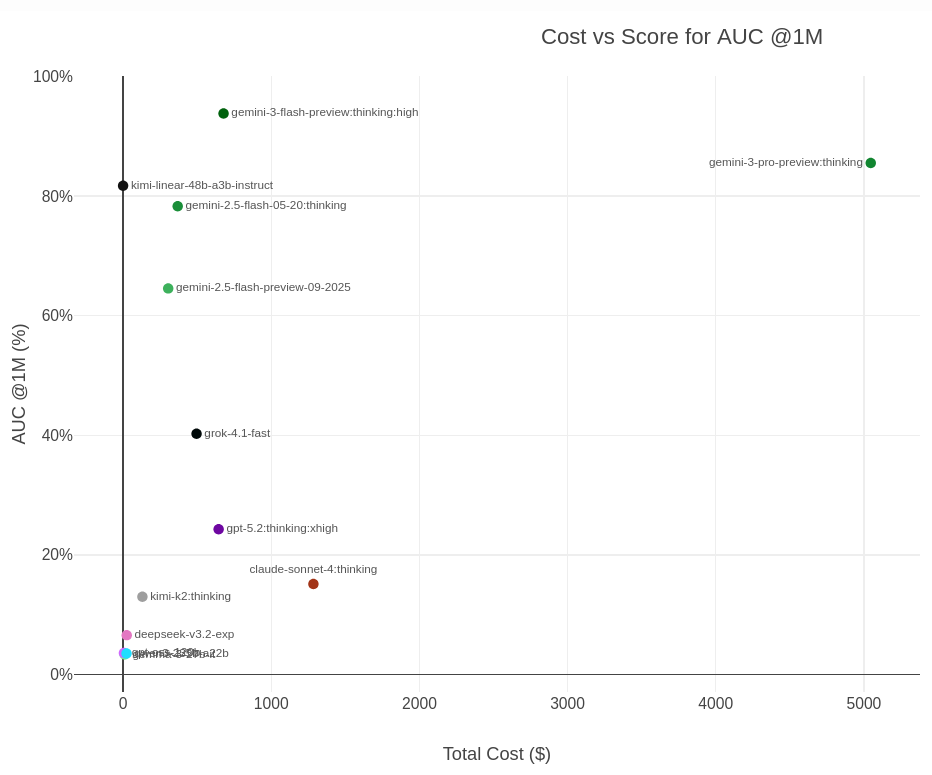

So let’s say we want to test this, right? How do you test how good your attention really is? The closest we can get is a little benchmark where the models are supposed to retrieve “a needle out of a haystack”: give them something like a big story of 1 million tokens and ask them to retrieve a single fact from it.
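Roughly, a needle-in-a-haystack trial can be sketched like this. This is my own toy version, not the harness behind the graph: `build_haystack`, `run_trial`, and the `ask_model` callable are all hypothetical names, and `cheating_model` is a stand-in that string-searches the prompt so the example runs without any API.

```python
import random


def build_haystack(needle: str, n_filler: int, depth: float, seed: int = 0) -> str:
    """Bury `needle` among filler sentences at a relative depth (0.0 = start, 1.0 = end)."""
    rng = random.Random(seed)
    sentences = [
        f"Filler sentence {i}: the number {rng.randint(0, 9999)} means nothing."
        for i in range(n_filler)
    ]
    sentences.insert(int(depth * n_filler), needle)
    return " ".join(sentences)


def run_trial(ask_model, secret: str, n_filler: int, depth: float) -> bool:
    """One trial: succeed if the model's answer contains the hidden secret."""
    needle = f"The secret passphrase is {secret}."
    prompt = build_haystack(needle, n_filler, depth)
    prompt += "\n\nWhat is the secret passphrase?"
    return secret in ask_model(prompt)


def cheating_model(prompt: str) -> str:
    """Stand-in for a real LLM call (assumption): finds the needle by string search."""
    marker = "The secret passphrase is "
    start = prompt.index(marker) + len(marker)
    return prompt[start:prompt.index(".", start)]


depths = [0.0, 0.25, 0.5, 0.75, 1.0]
results = [run_trial(cheating_model, "mango-42", 1_000, d) for d in depths]
print(f"success rate: {sum(results) / len(results):.0%}")
```

A real run would swap `cheating_model` for an actual model call and scale the filler up towards the full context window. The success rate over depths and lengths is the kind of number that ends up on the Y-axis.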

So now, let’s see how most SOTA models perform, shall we? The X-axis is cost, the Y-axis is success rate.

Haha, what the fuck! Look at how good every Google model is! What the fuck are they doing? This is some black fucking magic! I strongly suspect they’ve sort of cracked good sub-quadratic attention. They’re just mogging everyone![1]

The exception to this is Kimi Linear, from the Chinese lab that is experimenting with linear attention. But the problem is that they found their linear attention to be notoriously bad at knowledge tasks, which Gemini 3 Flash is super good at. Gemini 3 Pro and Flash perform spectacularly on benchmarks and are insanely cheap compared to the competition. For context, Gemini 3 Flash’s biggest competitor is likely Opus 4.5, which is literally 10 times more expensive.[2]

Google does not have significantly more compute than their competitors, but I get more free Gemini 3 Pro usage than I get paid Opus 4.5 usage. This is kind of bizarre. You can subsidise a userbase, but by how much? Inference is super duper expensive. Need I remind you that OpenAI is literally losing money on $200/month subscriptions?

This is extra puzzling when you consider that our best available heuristics point to Gemini 3 Pro being an absolutely huge model. So how the fuck is Google serving this to billions? For free?

Nothing seems to explain this other than Google having figured out subquadratic attention, or some other paradigm shift at the architectural level.

Except… Maybe, there is…?

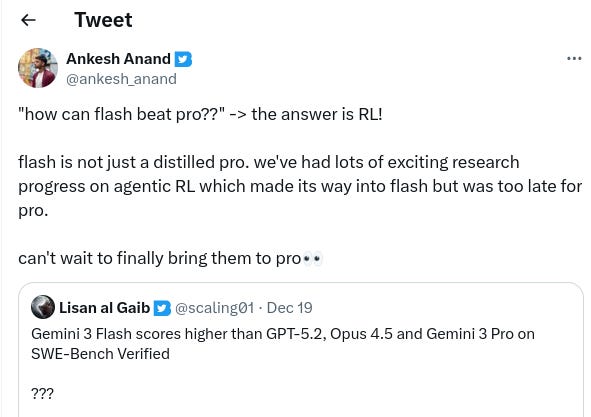

If you ask Google engineers “why is 3 Flash so cracked?”, they will give you a different answer: it’s scaling RL.

And this definitely does play a role. If you look at that graph again, do you see the difference between 2.5 Flash Preview and 2.5 Flash? Do you see how they get a better score for less money? That’s very likely the exact same underlying architecture, so basically all improvements from 2.5 Flash Preview to 2.5 Flash can be attributed to RL. It doesn’t seem entirely impossible that they figured out a way to build really good RL environments and otherwise use basically the same attention mechanism that Kimi does.

But again, this does not answer how they got it to be so good at other tasks! Maybe Kimi sucks at RL, and current linear attention can already do this? It’s possible!

And Anthropic especially seems to be really behind here (to be fair, Opus isn’t on here), scoring horribly on these benchmarks and being notorious for “compacting the conversation” every X tokens.[3] Square that with Opus 4.5 being the best model based on vibes for a lot of people, and my argument strengthens.

The full picture is admittedly more complex. A lot of models alternate between quadratic and subquadratic attention layers, so it doesn’t have to be a single algorithm. It could just be a clever way to combine these.
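Here is a back-of-the-envelope Python sketch of what mixing layer types buys you. Everything in it is an illustrative assumption on my part: the cost model (n^2 for a full layer, n times a fixed `state_size` for a linear one), the `state_size` value, and the 3:1 schedule. It says nothing about what Google actually runs.

```python
def stack_cost(schedule: list[str], n_tokens: int, state_size: int = 4096) -> int:
    """Rough attention cost of a layer stack, in arbitrary units."""
    total = 0
    for kind in schedule:
        if kind == "full":
            total += n_tokens * n_tokens    # quadratic in context length
        elif kind == "linear":
            total += n_tokens * state_size  # linear in context length
        else:
            raise ValueError(f"unknown layer kind: {kind}")
    return total


all_full = ["full"] * 8
hybrid = ["linear", "linear", "linear", "full"] * 2  # hypothetical 3:1 mix

n = 1_000_000
ratio = stack_cost(hybrid, n) / stack_cost(all_full, n)
print(f"hybrid stack costs {ratio:.1%} of the all-quadratic stack at {n:,} tokens")
```

Under these assumptions the few remaining full layers still dominate the bill at long context, so how you combine the layers matters about as much as which subquadratic algorithm you pick.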

So yeah, Google is clearly cooking something.

The death of open research

Whatever it is, it really saddens me that I will never get to find out. Academic research had its problems, with journal publishing and ease of access and such, but this is the downside of frontier research happening in industry. There is knowledge on the inside. Knowledge that allows us to build things that more and more seem to resemble some form of general intelligence. And I do not have access to that knowledge, and that really stings. And this problem is only getting worse for frontier LLM research. Thank you, China, for still publishing!

So, to my friends who asked me at a party: why has OpenAI seemingly fallen off? Why do researchers literally get billion-dollar contracts? It’s because of bullshit like this. If you can find good subquadratic attention algorithms, or build the right RL environment, you can hold up the entire stock market.

The future

This all ties into why I feel pretty bullish on the short-term future of LLMs:

1. RL research is keeping pace, and there’s little reason to believe it will stop. There are a lot of good RL environments to make. Gemini 3 Pro didn’t even get the RL that Flash did, and it’s already crazy good! Google is apparently already testing 3.5 internally.

2. Datacenter compute is set to roughly double every year.

3. We keep finding architectures that can do more with less.

If you think AI progress will stall, hard, all 3 of these would have to suddenly stop being true, and I just do not see this happening. Go read Bentham’s Bulldog or the much longer Forethought report on the subject if you’re interested.

Strap in, the next few years are going to be wild.

[1] Note that this is generally not a benchmark you can just train for and get good at, like you can with math etc. Models kind of intrinsically learn this behavior, and it’s mostly up to your architecture whether they are even capable of doing it.

[2] Based on OpenRouter API input credits. (Anthropic is generally more expensive for this, but the point still stands: no one is getting free Opus 4.5 usage, everyone is getting nearly unlimited 3 Flash usage.)

[3] Note: maybe this is just because they notice performance degrading if the context window is full of junk. It isn’t logically implied, but it is curious to note nonetheless.
